Mediums of Computational Communication


Preface

I’m currently taking a class on computers and information technology, and we recently covered machine languages and their evolution. An ongoing discussion at the time was the various ways machine languages differ from human languages. A lot of points were brought up: the flexibility of human languages (HLs), the rigidity of machine languages (MLs), the use of synonyms, references, and metaphors in HLs, and more.

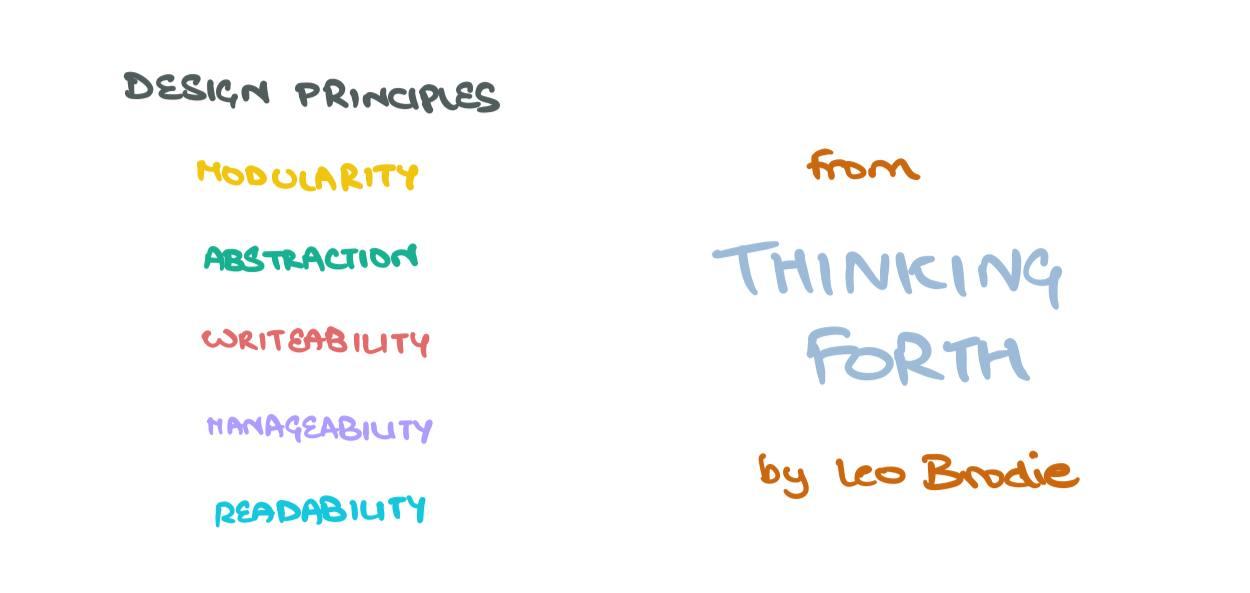

In this post, I wish to highlight three more differences that I think are very cool and do a fine job of differentiating the two. They are, to some extent, inspired by the book I’m reading, “Thinking Forth” by Leo Brodie. FYI, Forth is a popular programming language created by Charles H. Moore, and it is said to have been designed with a lot of forethought and reflection on what languages should be like. In this post, MLs are the languages a computer is given instructions in; this includes low-level languages like Assembly as well as high-level languages like Python and Golang. HLs include the language this blog post was written in (English!) and the other languages spoken around the world.

The Differences

Order of Formalisation

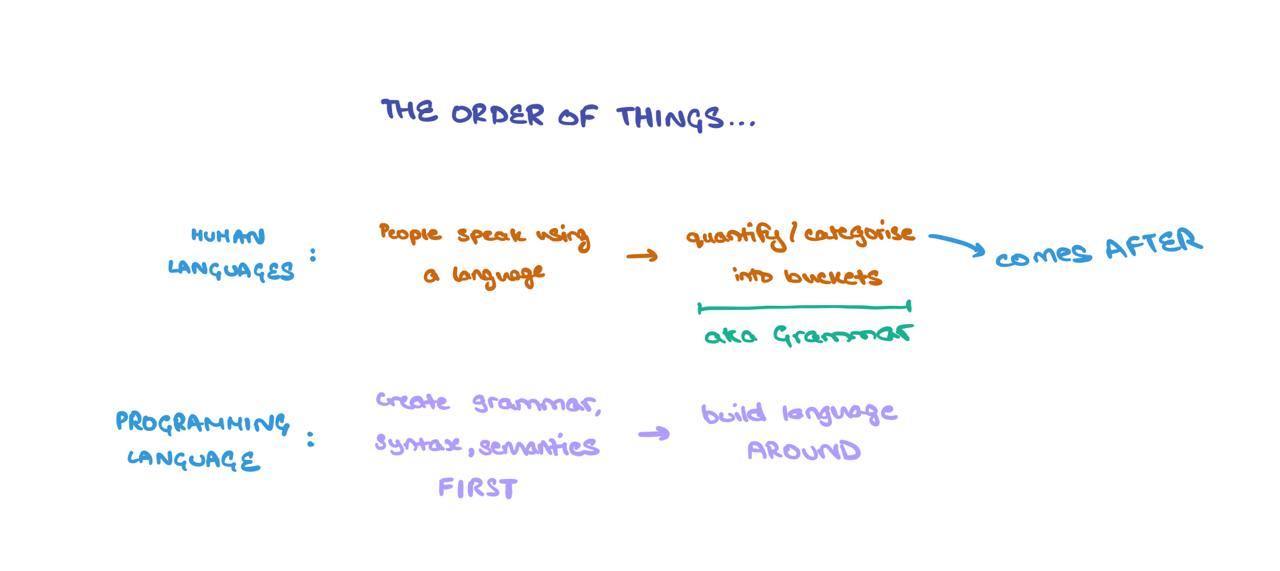

For HLs, the language is created and used first, and the grammar and syntax are formalised afterwards. A bunch of people (i.e., linguists) come together and write down a set of “rules” that categorise the different units of language (e.g., words, phrases, parts of speech). For MLs, the grammar, semantics, and syntax are devised first, and the language is then built on top of this schematic. In that sense, machine languages are very derivative.
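To make the “grammar first” idea concrete, here is a minimal sketch in Python (my own toy illustration, not something from Brodie’s book). A tiny arithmetic language has its grammar written down before any code exists, and the parser is then built on top of it, with one method per grammar rule. The grammar and the names `tokenize`, `Parser`, and `evaluate` are all hypothetical.

```python
import re

# Step 1: the grammar is fixed *before* any code is written (EBNF-style):
#   expr   := term (("+" | "-") term)*
#   term   := factor (("*" | "/") factor)*
#   factor := NUMBER | "(" expr ")"
# Step 2: the parser below is built on top of that grammar, one method per rule.

TOKEN = re.compile(r"\s*(\d+|[+\-*/()])")


def tokenize(src: str) -> list[str]:
    """Split the source into integer literals and operator/parenthesis tokens."""
    tokens, pos, src = [], 0, src.rstrip()
    while pos < len(src):
        match = TOKEN.match(src, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


class Parser:
    def __init__(self, tokens: list[str]) -> None:
        self.tokens = tokens
        self.i = 0

    def peek(self):
        return self.tokens[self.i] if self.i < len(self.tokens) else None

    def eat(self, expected=None) -> str:
        tok = self.peek()
        if tok is None or (expected is not None and tok != expected):
            raise SyntaxError(f"expected {expected!r}, got {tok!r}")
        self.i += 1
        return tok

    def expr(self):  # expr := term (("+" | "-") term)*
        value = self.term()
        while self.peek() in ("+", "-"):
            op = self.eat()
            rhs = self.term()
            value = value + rhs if op == "+" else value - rhs
        return value

    def term(self):  # term := factor (("*" | "/") factor)*
        value = self.factor()
        while self.peek() in ("*", "/"):
            op = self.eat()
            rhs = self.factor()
            value = value * rhs if op == "*" else value / rhs
        return value

    def factor(self):  # factor := NUMBER | "(" expr ")"
        tok = self.peek()
        if tok == "(":
            self.eat("(")
            value = self.expr()
            self.eat(")")
            return value
        if tok is not None and tok.isdigit():
            self.eat()
            return int(tok)
        raise SyntaxError(f"unexpected token {tok!r}")


def evaluate(src: str):
    """Parse and evaluate a whole expression; anything the grammar doesn't allow is rejected."""
    parser = Parser(tokenize(src))
    value = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError(f"trailing token {parser.peek()!r}")
    return value


if __name__ == "__main__":
    print(evaluate("(2 + 3) * 4"))  # 20
```

Notice that the code has no opinion of its own about what counts as valid: `"2 +"` or `"2 3"` are rejected purely because the grammar written beforehand doesn’t allow them. That is the opposite of how HLs work, where usage comes first and the rules are written down later.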

Presence of Design Principles

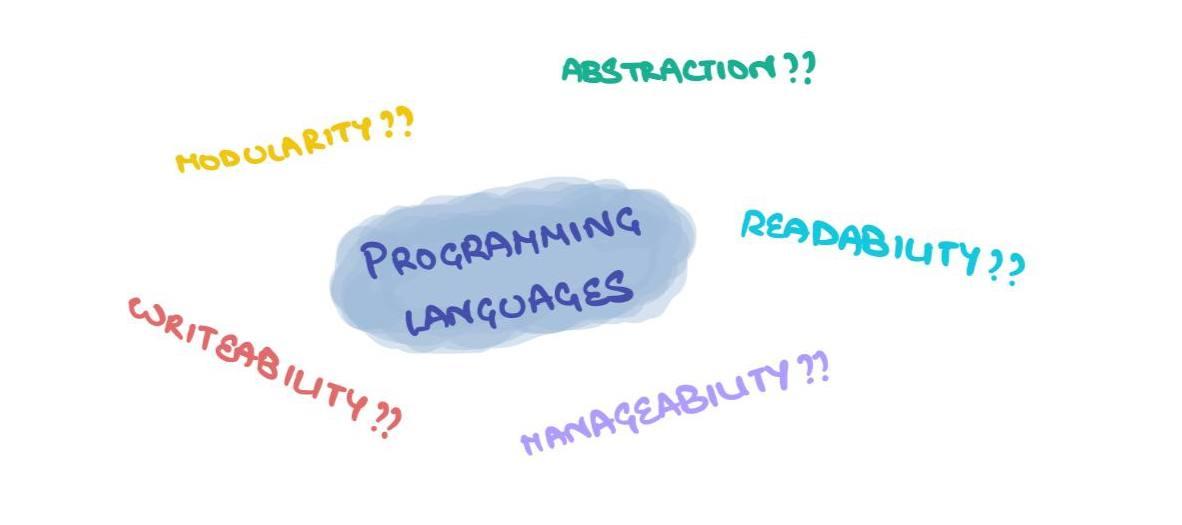

MLs have design principles that guide their construction, such as modularity, readability, writeability, manageability, and abstraction.

However, HLs do not have such principles attached to their creation. No one sat down one day and said, “I’m going to create a schematic for a human language that’s so good, everyone’s gonna use it!!!” Instead, a human language develops naturally the longer a diverse set of people use it. Furthermore, back in the day, languages evolved greatly when they were carried away from their place of origin and spread far and wide, because locals would add their own flavour to them. This isn’t widely seen in MLs: a given ML stays the same no matter where in the world it’s used. I guess that automatically solves the issue of “but it works on my computer!”. This goes back to MLs’ rigidity in expression and usage.

Generalisability to Higher Levels

In the book, Brodie talks about how Forth was built using the design principles listed above. Conveniently, these principles also generalise to building computer software, i.e., software can be modular, readable, writeable, manageable, and rich in abstraction. In other words, the “creation” (the software being built) is constructed using the same principles and considerations as the “medium of creation” (the ML or programming language used).
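Here is a minimal sketch of what “the creation is built from the same principles as the medium” can look like. It is a toy Forth-flavoured stack machine in Python (my own simplification, not real Forth and not code from the book); the names `MiniForth`, `define`, `execute`, `run`, and `is_number` are hypothetical. The one idea borrowed from Forth is that you extend the language by defining new words out of existing ones, loosely like Forth’s `: name ... ;` colon definitions.

```python
def is_number(token: str) -> bool:
    """True if the token is an integer literal such as 42 or -7."""
    try:
        int(token)
    except ValueError:
        return False
    return True


class MiniForth:
    """A toy, Forth-flavoured stack machine (hypothetical sketch, not real Forth)."""

    def __init__(self) -> None:
        self.stack: list[int] = []
        # Primitive words, implemented directly in Python.
        self.words = {
            "+": lambda: self._binary(lambda a, b: a + b),
            "-": lambda: self._binary(lambda a, b: a - b),
            "*": lambda: self._binary(lambda a, b: a * b),
            "dup": lambda: self.stack.append(self.stack[-1]),
            "swap": self._swap,
        }

    def _binary(self, op) -> None:
        # Pop two values and push the result, e.g. "5 2 -" leaves 3.
        b = self.stack.pop()
        a = self.stack.pop()
        self.stack.append(op(a, b))

    def _swap(self) -> None:
        self.stack[-1], self.stack[-2] = self.stack[-2], self.stack[-1]

    def define(self, name: str, source: str) -> None:
        """Add a new word made only of words (or numbers) that already exist."""
        body = source.split()
        for token in body:
            if token not in self.words and not is_number(token):
                raise NameError(f"unknown word {token!r}")
        # The new word is just another entry in the same dictionary as the primitives.
        self.words[name] = lambda: self.execute(body)

    def execute(self, tokens: list[str]) -> None:
        for token in tokens:
            if token in self.words:
                self.words[token]()
            elif is_number(token):
                self.stack.append(int(token))
            else:
                raise NameError(f"unknown word {token!r}")

    def run(self, source: str) -> list[int]:
        """Run a line of source and return the resulting stack."""
        self.execute(source.split())
        return self.stack


if __name__ == "__main__":
    forth = MiniForth()
    forth.define("square", "dup *")
    forth.define("sum-of-squares", "square swap square +")
    print(forth.run("3 4 sum-of-squares"))  # [25]
```

`square` hides the `dup *` detail, and `sum-of-squares` is written in terms of `square` without caring how it works. The modularity and abstraction that shaped the language end up shaping the program built with it.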

This doesn’t necessarily work with HLs: the “design principles” of human language cannot be generalised to something higher, broader, or more abstract. One can’t clearly categorise or define these design principles either. As in, what makes one human language “better” than the others? That question is much easier to answer for machine languages (e.g., Python > Assembly because it’s more readable and writeable). Also, what would count as the creations? Poems, stories, literature in general? In that case, while a poem can be judged as well-crafted, the same judgement can’t be made about the language it is written in.

In a nutshell

Languages are fascinating: they have evolved from being simple media of communication to running the world of politics, economics, stock markets, and more. Words are charged with meaning and should never be taken at face value, especially not online. Machine languages have changed over the decades because humans crave less rigid ways of sharing ideas and concepts.

“Programming is a medium of communication – not between man and machine, but amongst man himself.” ~ my freshman “Intro to Programming” Professor at NUS

I look forward to seeing how communication with computers changes over the next few years. Improvements in state-of-the-art (SOTA) NLP models suggest we may be able to use natural language to tell computers what to do. However, I feel it’s more worthwhile to teach computers to fully understand the nuance of what these commands mean, instead of simply translating between natural language and machine code/language (which brings the whole Chinese Room argument into the picture).

Thank you to my classmate, Rachelle, for her 5-minute crash course on the history and evolution of language over text!!